“Those of us who watched Kids as adolescents,” writes Caroline Rothstein, in her Narrative.ly piece Legends Never Die, “Growing up in an era before iPhones, Facebook, and Tiger Moms, had our minds blown from wherever we were watching–whether it was the Angelika Film Center on the Lower East Side or our parents’ Midwestern basements. We were captivated by the entirely unsupervised teens smoking blunts, drinking forties, hooking up, running amok and reckless through the New York City streets…. Two decades after [the] film turned Washington Square skaters into international celebrities, the kids from ‘Kids’ struggle with lost lives, distant friendships, and the fine art of growing up.”

If you came up in the ’90s, you remember Kids. But I’d hardly given it a backward glance in ages. Had it really been two decades? It seemed somehow inconceivable. The cast, none of them professional actors, all plucked from the very streets they skated on, had become fixed in my mind as eternal teenagers, immortalizing a hyperbolized — and yet, not entirely foreign — experience. Kids was grotesque and dirty and self-indulgent and unignorable, and so was high school. Which is where I, and my friends, were at the time. The movie had become internalized. I had entirely forgotten that this was where Chloë Sevigny and Rosario Dawson had come from. Like a rite of passage, it seemed to carry a kind of continuity, like it was something everyone goes through. It seemed disconnected from any kind of evolving timeline.

And yet time had passed. Revisiting the lives of the cast 20 years later, Rothstein writes, “Justin Pierce, who played Casper, took his life in July 2000, the first of several tragedies for the kids. Harold, who played himself in the film and is best remembered for swinging his dick around in the pool scene—he was that kid who wasn’t afraid, who radiated a magnetic and infectious energy both on and off screen—is gone too. He died in February 2006 from a drug-induced heart attack.” Sevigny and Dawson have become successful actors. Others tied to the crew have gone on to lead the skate brand Zoo York, and start a foundation that aims to “use skateboarding as a vehicle to provide inner-city youth with valuable life experiences that nurture individual creativity, resourcefulness and the development of life skills.” The most striking story for me, however, was what happened over the past 20 years to the movie’s most profoundly central character:

“I think that Kids is probably the last time you see New York City for what it was on film,” [says Jon “Jonny Boy” Abrahams.] “That is to me a seminal moment in New York history because right after that came the complete gentrification of Manhattan.”

Kids immortalizes a moment in New York City when worlds collided–“the end of lawless New York,” Eli [Morgan, co-founder of Zoo York] says–before skateboarding was hip, before Giuliani cleaned up, suited up, and wealthy-ed up Manhattan.

“I don’t think anyone else could have ever made that movie,” says Leo [Fitzpatrick, who played the main character, Telly]. “If you made that movie a year before or after it was made, it wouldn’t be the same movie.”

Kids’ low-budget grit and amateur acting gave it a strange ambivalence. It was neither fully fictional nor fully real. It blurred the line between the two in a way that it itself did not quite fully understand — it was the very, very beginning of “post-Empire,” when such ambiguities would become common — and neither did we. Detached from the confines of the real and the fictional, it had a sense of also being out of time. But it turns out it was in fact the opposite. Kids was a time capsule. As Jessica [Forsyth] says in the article: “It’s almost like Kids was the dying breath of the old New York.”

It’s a strange thing. One day you wake up and discover that culture has become history. In the end it wasn’t a dramatic disaster or radical new technology that changed the narrative in an instant. It was a transition that happened gradually. The place stands still, and time revolves around it; changes it the way wind changes the topography of dunes.

Just a few days after Rothstein’s piece, I read these truly chilling words in The New York Times:

“The mean streets of the borough that rappers like the Notorious B.I.G. crowed about are now hipster havens, where cupcakes and organic kale rule.”

For current real estate purposes, the block where the Brooklyn rapper Notorious B.I.G., whose real name was Christopher Wallace, once sold crack is now well within the boundaries of swiftly gentrifying Clinton Hill, though it was at the edge of Bedford-Stuyvesant when he was growing up. Biggie, who was killed under still-mysterious circumstances in 1997, was just one of the many rappers to emerge from Brooklyn’s streets in the ’80s and ’90s. Including successful hardcore rappers, alternative hip-hop M.C.s, respected but obscure underground groups and some — like KRS-One and Gang Starr — who were arguably all of the above, the then-mean streets gave birth to an explosion of hip hop. Among the artists who lived in or hung out in this now gentrified corner of the borough: Not only Jay-Z, but also the Beastie Boys, Foxy Brown, Talib Kweli, Big Daddy Kane, Mos Def and Lil’ Kim.

For many, the word “Brooklyn” now evokes artisanal cheese rather than rap artists. The disconnect between brownstone Brooklyn’s past and present is jarring in the places where rappers grew up and boasted about surviving shootouts, but where cupcakes now reign. If you look hard enough, the rougher past might still be visible under the more recently applied gloss. And if you want to buy a piece of the action, Biggie’s childhood apartment, a three-bedroom walk-up, was recently listed by a division of Sotheby’s International Realty. Asking price: $725,000.

When we imagine the world of the future, it is invariably a world of science fiction. It’s always, “Here’s what Los Angeles might look like in seven years: swamped by a four-foot rise in sea level, California’s megalopolis of the future will be crisscrossed with a thousand miles of rail transportation. Abandoned freeways will function as waterslides while train passengers watch movies whiz by in a succession of horizontally synchronized digital screens. Foodies will imbibe 3-D-printed protein sculptures extruded by science-minded chefs.”

It’s always impersonal. The future, even one just seven years away, seems always inhabited entirely by future-people. It’s not a place where we actually imagine… ourselves. Who will we be when the music that speaks to us now becomes “Classic” (Attention deficit break: “Elders react to Skrillex”); when the movies or TV shows or — let’s be real, it’s most likely going to be — web content that captures the spirit of this moment becomes a time capsule instead of a reflection? When once counter-cultural expressions — like skating, or hip hop — become mainstream? Who will we be when there is no longer a mainstream, or a counter-culture, for that matter? And who will the teenagers of this future be when the culture of their youth ages?

The past isn’t a foreign country. It’s our hometown. It’s the place we left, that has become immortalized in our memory the way it was back then. We return one day to discover new buildings have sprung up in empty lots, new people have moved in and displaced the original residents. Some from the old neighborhood didn’t make it out alive. The past has moved while we weren’t looking. It’s no longer where it was at all.

“In the ’80s and ’90s–as strange as it may seem to say this–we had such luxury of stability,” William Gibson, the onetime science-fiction writer who popularized the word “cyberspace” and turned natural realist novelist in the 21st century, said in a 2007 interview. “Things weren’t changing quite so quickly in the ’80s and ’90s. And when things are changing too quickly you don’t have any place to stand from which to imagine a very elaborate future.”

Yet this week, it seems to me the more mysterious our future, the more the past becomes a moving target.

Then again, perhaps it always was.

“Strange memories on this nervous night in Las Vegas. Five years later? Six? It seems like a lifetime, or at least a Main Era—the kind of peak that never comes again. San Francisco in the middle sixties was a very special time and place to be a part of. Maybe it meant something. Maybe not, in the long run… but no explanation, no mix of words or music or memories can touch that sense of knowing that you were there and alive in that corner of time and the world. Whatever it meant.…

History is hard to know, because of all the hired bullshit, but even without being sure of “history” it seems entirely reasonable to think that every now and then the energy of a whole generation comes to a head in a long fine flash, for reasons that nobody really understands at the time—and which never explain, in retrospect, what actually happened.

There was madness in any direction, at any hour. You could strike sparks anywhere. There was a fantastic universal sense that whatever we were doing was right, that we were winning.… We had all the momentum; we were riding the crest of a high and beautiful wave.…

So now, you can go up on a steep hill in Las Vegas and look West, and with the right kind of eyes you can almost see the high-water mark—that place where the wave finally broke and rolled back.”

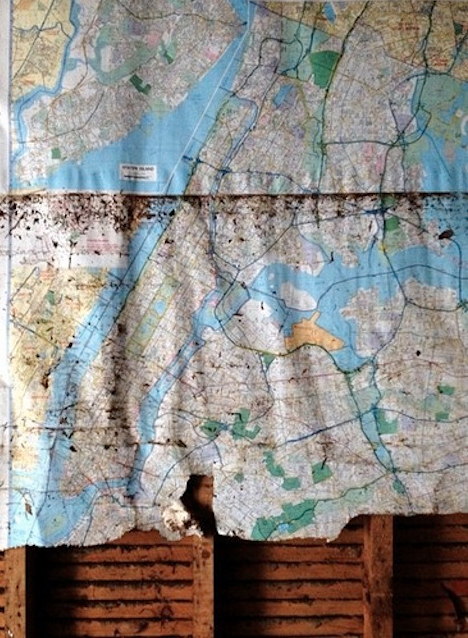

Map of New York City showing the remnants of the 6 ft high-water line from Hurricane Sandy.

Crom Martial Training, Rockaway Beach.